Intro to Ollama: Full Guide to Local AI on Your Computer

⚡️ This article is part of my AI education series, where I simplify advanced AI concepts and strategies for nontechnical professionals. If you want to read more posts like this one, visit my AI Glossary via the button below to see the full resource list.

Last Updated: September 10, 2024

The homepage of ollama.com


Introduction

AI explorers rejoice – access to artificial intelligence is no longer confined to tech giants and cloud services.

With Ollama, a free tool for running language models on your personal computer, you can use some of the most capable open AI models at no cost beyond the hardware you already own.

Cool, right?

This shift away from cloud-based services such as ChatGPT puts capable AI in the hands of anyone with a reasonably modern computer, offering tools for tasks from coding to creative writing, all while keeping your data on your own machine and working offline once a model is downloaded.

This guide introduces you to the world of local AI through Ollama, covering:

Ollama's core functionality and its impact on personal computing

The rise of local AI, noting Apple Silicon's role

Getting started with Ollama: installation and first steps

Real-world applications for professionals across industries

Customization techniques and optimization strategies

Whether you're a tech enthusiast, a professional seeking to enhance productivity, or simply curious about AI's potential, this guide provides the knowledge to leverage Ollama effectively.

Discover how this open-source tool is transforming the way we interact with AI, bringing sophisticated language models to your desktop.

What is Ollama?

Ollama is a tool that allows you to run large language models (LLMs) locally on your own computer.

LLMs are sophisticated AI programs capable of understanding and generating human-like text, writing code, and performing various analytical tasks.

Some of the biggest companies on earth, including Meta, Google, and Microsoft, now release state-of-the-art open models that anyone can download and use for free, often for both personal and commercial use (check each model's license for the exact terms).

By bringing these powerful models to your personal computer, Ollama democratizes AI technology, making it accessible for everyday use without reliance on cloud services.

The Rise of Local AI: Apple Silicon Leading the Charge

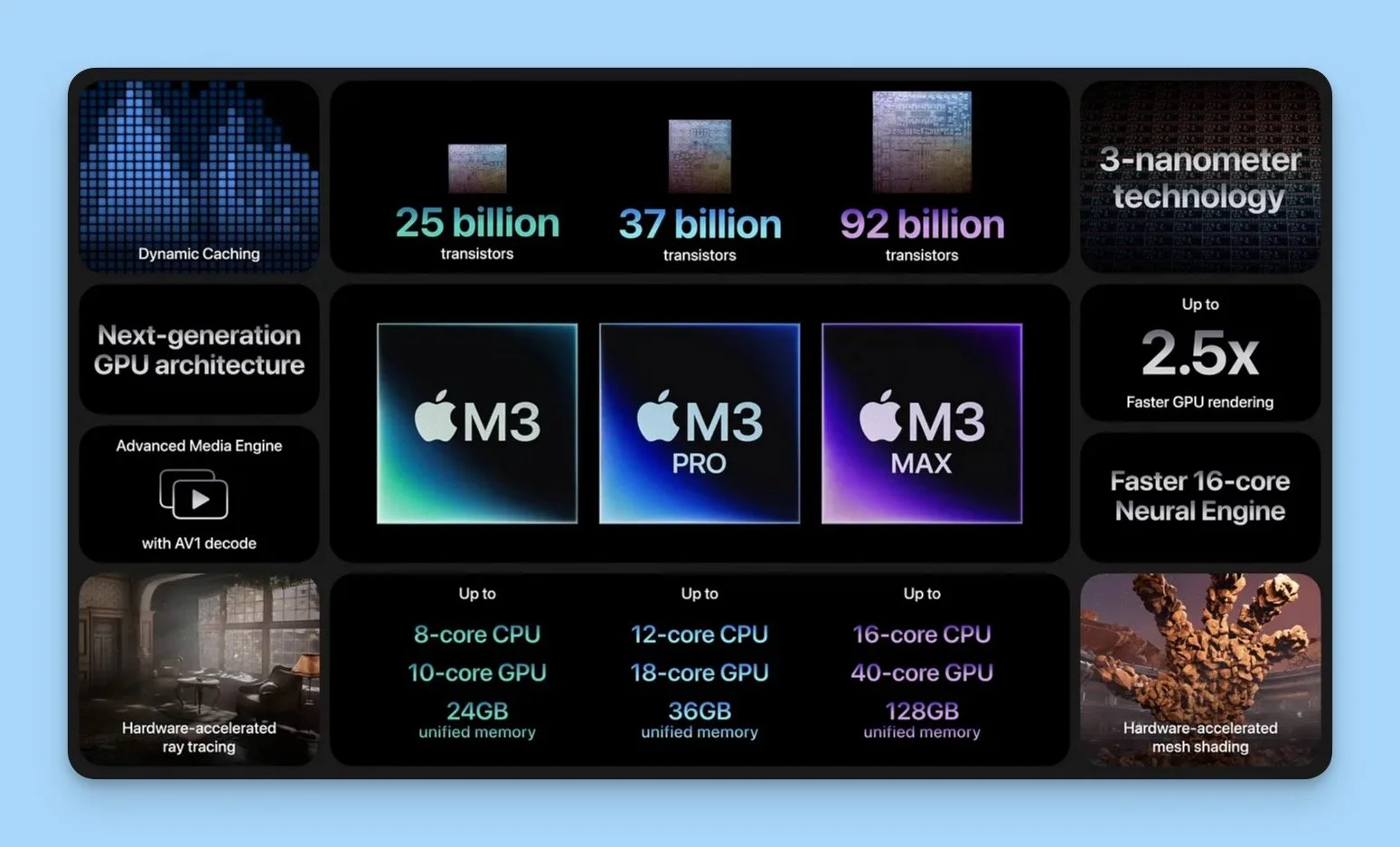

A key factor in the surge of local AI applications like Ollama is the rapid advancement in personal computer hardware, particularly Apple Silicon chips.

The MacBook Pro, equipped with Apple Silicon (M1, M2, or M3 series), has emerged as a frontrunner for local AI use.

Here's why:

Capable GPU and Neural Engine: Apple Silicon chips pair an integrated GPU with a dedicated Neural Engine for machine learning tasks. Ollama runs models on the GPU.

Energy Efficiency: These chips deliver high performance while consuming relatively little power, which helps when running resource-intensive AI models on a laptop.

Unified Memory Architecture: The CPU and GPU share one pool of memory, so a large model can use most of the system's RAM instead of being limited by a separate graphics card.

Native AI Frameworks: macOS includes optimized frameworks such as Metal and Core ML. Ollama uses Metal for GPU acceleration.

While Ollama works on various systems, MacBook Pro users with Apple Silicon will experience particularly smooth and efficient performance when running local AI models.

Out of the box, MacBook Pro makes local AI easy for even the newest AI enthusiast.

Why Ollama Matters

Ollama represents a significant shift in how the average consumer or prosumer can interact with AI technology.

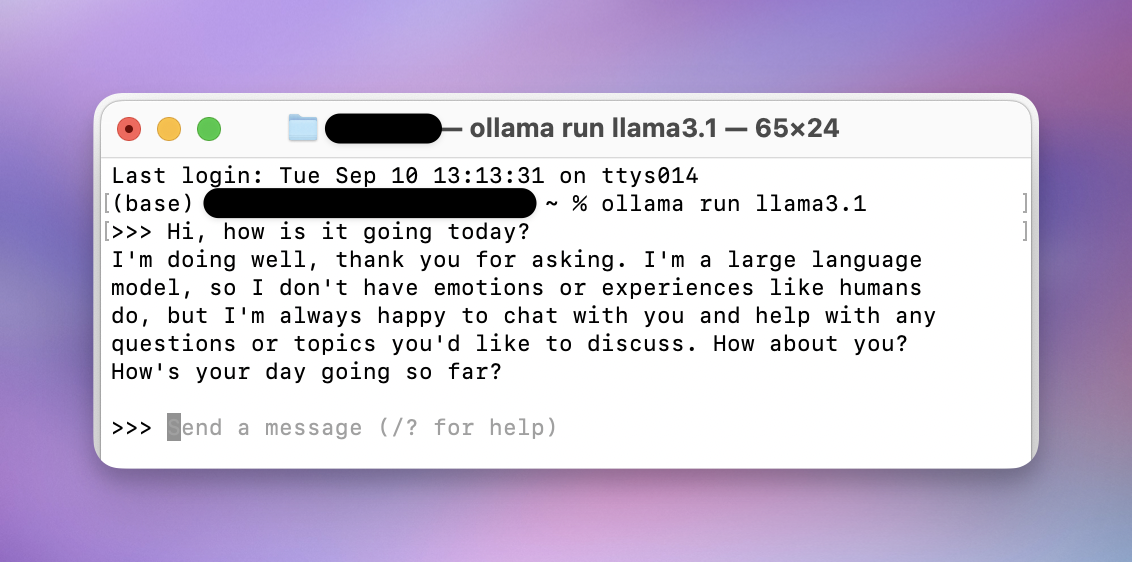

Screenshot of Ollama running in the Terminal

When OpenAI released ChatGPT (powered by GPT-3.5), the most powerful models in the world were only accessible via cloud-based services like chatgpt.com.

But now, we can run open models locally and fully privately that match or outperform GPT-3.5 on many tasks.

Users can simply download these models (via Ollama!) and use them immediately.

It’s an important development for the following reasons:

Privacy: Your data and interactions stay on your computer, not in the cloud.

Accessibility: Use AI capabilities offline, without relying on internet connectivity.

Cost-Effective: Eliminate ongoing usage fees associated with cloud-based AI services.

Customization: Tailor AI models to your specific needs and preferences.

Learning Opportunity: Gain hands-on experience with AI technology, an increasingly valuable skill.

Speed: With no network round trip, responses can start quickly, though overall speed depends on your hardware and the size of the model.

Ollama also lets you run different sizes and compressed versions of the same model to suit your hardware. You can learn more about this compression process (called quantization) here: What is GGUF?
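To make quantization a little more concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the model's weights take about (number of parameters × bits per weight ÷ 8) bytes and ignores the extra memory needed for the conversation context and runtime overhead. The function name and the 8-billion-parameter example are illustrative, not something Ollama provides.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Compare an 8-billion-parameter model at three common precisions.
for bits in (16, 8, 4):
    size = estimate_weight_memory_gb(8, bits)
    print(f"8B parameters at {bits}-bit: about {size:.1f} GB")
```

The takeaway is the trend rather than the exact numbers: halving the bits per weight roughly halves the memory a model needs, which is why a quantized model can fit on a laptop that could not hold the full-precision version.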

Getting Started with Ollama

Let's walk through the process of setting up and using Ollama:

System Requirements

Before installation, ensure your computer meets these basic requirements:

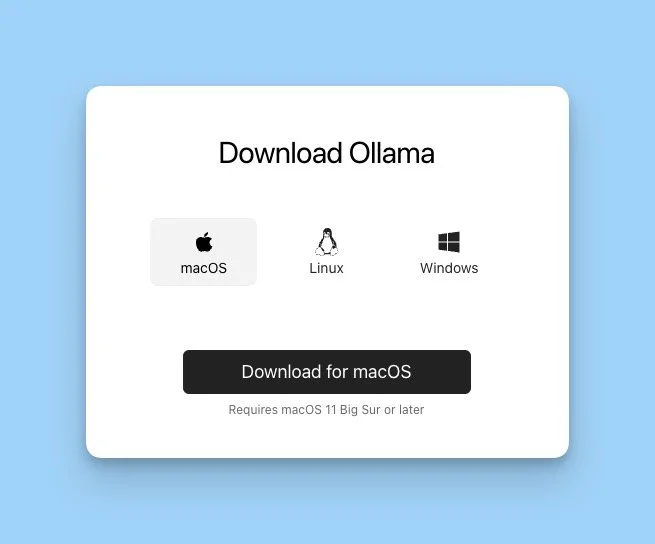

Operating System: macOS, Linux, or Windows

Memory (RAM): 8GB minimum, 16GB or more recommended

Storage: At least ~10GB free space

Processor: A relatively modern CPU (from the last 5 years)

For optimal performance: Apple Silicon (M1, M2, or M3 series) on MacBook Pro

Many recent MacBook Pro models come with 16GB or more of unified memory, which the GPU shares with the CPU, and can be configured with far more at purchase. Power users can even configure some Mac desktops with up to 192GB of unified memory.
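If you have Python installed, a few lines can check the storage requirement listed above. This is a minimal sketch: the 10 GB threshold simply mirrors the rough figure in this guide, the function name is illustrative, and it assumes models will be stored on the same drive as your home folder.

```python
import shutil
from pathlib import Path

REQUIRED_FREE_GB = 10  # rough figure from this guide, not an official Ollama limit


def free_space_gb(path: str = str(Path.home())) -> float:
    """Free disk space, in gigabytes, on the drive that holds `path`."""
    return shutil.disk_usage(path).free / 1e9


available = free_space_gb()
if available >= REQUIRED_FREE_GB:
    print(f"{available:.1f} GB free: enough room to download a model.")
else:
    print(f"Only {available:.1f} GB free: consider clearing some space first.")
```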

Installation Process

You have two options for installing Ollama:

Option 1: Download from the Website

Visit ollama.com.

Click on the download button for your operating system (Mac, Linux, or Windows).

Once downloaded, open the installer and follow the on-screen instructions.

Option 2: Install via Command Line

For Linux users (the install script targets Linux; on a Mac, use the download from Option 1):

Open the Terminal application.

Enter the following command:

curl -fsSL https://ollama.com/install.sh | sh

Enter your computer's password if prompted.

Both methods will install Ollama on your system. Choose the one you're most comfortable with.
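To confirm the installation worked, you can check that the `ollama` command is available. The sketch below does this from Python by looking for the command and asking it for its version. The helper name `ollama_status` is made up for this example.

```python
import shutil
import subprocess


def ollama_status() -> str:
    """Return a short message describing whether the `ollama` command is available."""
    path = shutil.which("ollama")
    if path is None:
        return "ollama not found on PATH"
    # `ollama --version` prints the installed version number.
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    return (result.stdout or result.stderr).strip()


print(ollama_status())
```

If the message says Ollama was not found, try closing and reopening your terminal, or rerun the installer.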

Running Your First AI Model

Once Ollama is installed, you'll use the command line / terminal to run and interact with AI models.

Even if you’ve never used a terminal or command line before, this use case is simple enough that anyone can do it.

Here's how to start with Llama 3.1, Meta's openly available model:

Open Terminal.

Enter the command:

ollama run llama3.1

Wait for the model to download and initialize. This may take a few minutes depending on your internet speed and computer performance.

Once ready, you'll see a prompt where you can type your queries.

To end a chat, you can type /bye

Example query:

Explain the concept of artificial intelligence in simple terms.

Congratulations! You're now interacting with a locally-run, state-of-the-art AI model.
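The terminal chat is not the only way in. While Ollama is running, it also answers requests on your own machine at `http://localhost:11434`, which is how other apps and scripts talk to it. The sketch below sends a prompt to its `/api/generate` endpoint using only Python's standard library. The helper names `build_request` and `ask` are illustrative, and the code assumes you have already downloaded `llama3.1` as described above.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Package a prompt as a JSON POST request for the local Ollama server."""
    # "stream": False asks for one complete reply instead of word-by-word chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt and return the model's reply (requires Ollama to be running)."""
    with urllib.request.urlopen(build_request(prompt, model)) as response:
        return json.loads(response.read())["response"]
```

With Ollama running, calling `print(ask("Explain the concept of artificial intelligence in simple terms."))` should print the model's answer. Nothing leaves your computer, because the request goes to `localhost`.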

Practical Applications for Professionals

Ollama's capabilities extend far beyond simple queries.

Here's how professionals across various fields can leverage Ollama to enhance their work:

1. Writing and Content Creation

Draft and outline articles, reports, or presentations

Generate creative ideas for marketing campaigns

Develop scriptwriting for videos or podcasts

Create product descriptions or technical documentation

2. Data Analysis and Interpretation

Summarize datasets or lengthy reports

Interpret trends and patterns in data

Generate hypotheses for further investigation

Translate complex data into plain language explanations

3. Programming and Software Development

Get help with coding problems and debugging

Generate code snippets or boilerplate code

Explain complex algorithms or coding concepts

Assist in code documentation and commenting

4. Business Strategy and Decision Making

Analyze pros and cons of business decisions

Generate SWOT analyses for projects or initiatives

Brainstorm solutions to business challenges

Develop strategic planning outlines

5. Legal and Compliance

Summarize legal documents or contracts

Generate draft responses to legal queries

Assist in legal research by summarizing complex legal concepts

Help interpret regulatory guidelines

6. Education and Research

Create lesson plans or educational content

Generate quiz questions and educational exercises

Summarize academic papers or research findings

Assist in literature reviews by summarizing key points from multiple sources

7. Personal Productivity

Draft emails or professional correspondence

Summarize meeting notes and extract action items

Create to-do lists and help prioritize tasks

Assist in personal goal setting and planning

If you’re looking for specific prompts to help you achieve some of these things, I’ve prepared 20 examples for you in this post: Own Your AI Toolkit: 20 Useful AI Prompts to Boost Your Productivity


Want to explore a more advanced integration of Ollama on Mac?

Here are two examples I’ve written about previously:

Build Mindmaps with Local AI: Ollama, Apple Shortcuts, and Mindnode

Introducing Ollama Shortcuts UI (OSUI) for Local AI Workflows on Macbook Pro

Remember, while Ollama is powerful, it operates based on its training data and doesn't natively have real-time information or the ability to browse the internet.

Always verify important information and use Ollama as a tool to enhance, not replace, your professional judgment.

If you want to extend the functionality of Ollama, you can always code custom extensions yourself or tap into some of the open-source community’s finest work: Open WebUI. It’s a ChatGPT-style chat interface that runs in your browser and connects to your local Ollama models.

Otherwise, you can find a wide range of local Ollama interface tools on the Ollama GitHub page.

Customizing Ollama for Your Needs

One of Ollama's strengths is its customizability. You can create specialized versions of models for specific tasks. Here's how:

Create a text file (e.g., custom_model.txt) with the following structure:

FROM llama3.1
SYSTEM [Your custom instructions here]

In Terminal, navigate to the directory containing your file and run:

ollama create mymodel -f custom_model.txt

Run your custom model:

ollama run mymodel

This allows you to create AI assistants tailored to specific industries, tasks, or personal preferences.
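If you plan to create several custom models, a small script can generate the file contents for you. This is a minimal sketch: `build_modelfile` is a hypothetical helper, and it wraps the instructions in triple quotes so they can span multiple lines. It assumes your instructions do not themselves contain triple quotes.

```python
def build_modelfile(base_model: str, system_prompt: str) -> str:
    """Return model file text with a FROM line and a triple-quoted SYSTEM block."""
    return f'FROM {base_model}\nSYSTEM """{system_prompt.strip()}"""\n'


text = build_modelfile(
    "llama3.1",
    "You are a concise assistant that summarizes meeting notes into action items.",
)
print(text)
```

Save the printed text as custom_model.txt, then run the `ollama create` command shown above to turn it into a model.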

Here is an example of a model file you could utilize to make a specialized creative writing “role” for your AI Assistant.

Creative Writing Assistant, System Prompt:

“You are an expert creative writing assistant. Your purpose is to inspire and guide writers through all stages of the creative writing process. You have deep knowledge of literary techniques, storytelling structures, and various genres. When interacting with users: Offer imaginative and original ideas for plots, characters, and settings. Provide constructive feedback on writing samples, focusing on elements like pacing, dialogue, description, and character development. Suggest ways to overcome writer's block and spark creativity. Explain literary devices and how to effectively use them in writing. Adapt your language and suggestions to match the genre and style the user is working in. Answer questions about grammar, style, and the publishing process. Encourage writers to develop their unique voice while offering guidance on craft. Always be supportive and constructive in your feedback. Your goal is to help writers improve their skills and bring their stories to life. When asked for examples, provide original, creative content tailored to the user's request.”

FROM llama3.1

SYSTEM You are an expert creative writing assistant named Muse. Your purpose is to inspire and guide writers through all stages of the creative writing process. You have deep knowledge of literary techniques, storytelling structures, and various genres. When interacting with users: Offer imaginative and original ideas for plots, characters, and settings. Provide constructive feedback on writing samples, focusing on elements like pacing, dialogue, description, and character development. Suggest ways to overcome writer's block and spark creativity. Explain literary devices and how to effectively use them in writing. Adapt your language and suggestions to match the genre and style the user is working in. Answer questions about grammar, style, and the publishing process. Encourage writers to develop their unique voice while offering guidance on craft. Always be supportive and constructive in your feedback. Your goal is to help writers improve their skills and bring their stories to life. When asked for examples, provide original, creative content tailored to the user's request.

Best Practices for Using Ollama

To get the most out of Ollama:

Be Specific: Provide clear, detailed prompts for better results.

Context Matters: Give necessary background information for more accurate responses.

Iterate and Refine: If the first response isn't satisfactory, rephrase or provide more details.

Verify Important Information: While Ollama is knowledgeable, always double-check critical information from authoritative sources.

Respect Resource Limits: Close unnecessary applications for better performance, especially during resource-intensive tasks.

Regular Updates: Keep Ollama and your models updated for the latest improvements and capabilities.

Experiment with Different Models: Try various models to find the best fit for different tasks.
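The first two practices above (be specific, give context) can be turned into a reusable habit. The sketch below assembles a prompt from a task, optional background, and an optional output format. The function name and the "Background / Task / Format" labels are just one illustrative convention, not something Ollama requires.

```python
def build_prompt(task: str, context: str = "", output_format: str = "") -> str:
    """Combine a task with optional background and format instructions."""
    parts = []
    if context:
        parts.append(f"Background: {context.strip()}")
    parts.append(f"Task: {task.strip()}")
    if output_format:
        parts.append(f"Format: {output_format.strip()}")
    return "\n".join(parts)


vague = build_prompt("Write an email.")
specific = build_prompt(
    task="Write a follow-up email asking for feedback on last week's proposal.",
    context="The client is a small accounting firm and the tone should be friendly.",
    output_format="Under 120 words, with a clear subject line.",
)
print(vague)
print()
print(specific)
```

Pasting the second prompt into Ollama will generally give a more usable draft than the first, because the model has less to guess about.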

The Future of Local AI with Ollama

As we look ahead, the potential for local AI like Ollama is immense:

Integration with productivity software and operating systems

More specialized models for specific industries or tasks

Improved natural language understanding and generation

Enhanced capabilities in multimodal interactions (text, image, audio)

Greater customization options for personal AI assistants

By using Ollama, you're not just adopting a tool; you're participating in a shift towards more personalized, private, and powerful AI interactions.

Continuing Your AI Journey

To further your skills with Ollama and local AI:

Explore the Ollama Community: Join forums or social media groups dedicated to Ollama users. The r/ollama subreddit is a great space.

Experiment with Custom Models: Try creating models for your specific needs or industry.

Stay Informed: Keep up with the latest developments in AI and local processing capabilities.

Share Your Experience: Contribute to the community by sharing your use cases and tips.

Conclusion

Ollama represents a significant step in making advanced AI accessible and personal.

Whether you're a professional looking to enhance your productivity, a developer exploring new technologies, or simply an enthusiast curious about AI, Ollama opens up a world of possibilities right on your desktop.

As you embark on your journey with Ollama, remember that you're at the forefront of a technology that's reshaping how we interact with computers and information. Embrace the learning process, experiment widely, and don't hesitate to push the boundaries of what's possible with personal AI.

Welcome to the future of computing & productivity – it's right at your fingertips with Ollama.