The Shape of Thought: Knowledgespace

Preamble: In this post I introduce a theory for the geometry of knowledge in static embedding spaces, along with some early thoughts on AI as a collaborative epistemic navigator. This is partially inspired by Peter Gärdenfors’ The Geometry of Meaning.

In the AI field I would label myself a self-taught scientist. My research and explorations generally follow my natural curiosity and intuition with AI. I hope this exploration sparks your curiosity as it did mine.

A Journey Into AI

The emerging frontiers of AI are a fascinating place for exploration and discovery. The nature of AI models as cognitive mirrors is an infinite font of curiosity for me.

Even though I’m relatively new to the field (I started in early 2022), I’ve always been a cross-domain connector who bridges insights from different disciplines to come up with new ideas.

The particular connections I’m seeking to outline here (between knowledge, physics, geometry, and epistemology) feel exciting to me, and I’m hopeful they can spark discourse around the nature of knowledge in the AI era.

Let me also caveat that I’m not an academic. I don’t have a master’s or a doctorate in what I’m talking about here. I’ve read a lot of research and run several experiments (mostly noted in my blog here), and my aim is to label some patterns I’m seeing.

Here goes.

Knowledge as a Geometric Manifold

Let me start by saying that I love innovation. In the current AI boom, we have entered an era where competitive advantage is no longer determined solely by access to information, and intelligence is no longer the bottleneck. Rather, the ability to explore and navigate the hidden structures of knowledge itself is becoming a primary source of advantage in an industry.

In this post I briefly introduce Knowledgespace — an AI-native framework that presents knowledge as a high-dimensional, navigable topology, and positions AI as a tool for unlocking the potential discoveries in this latent space.

The prevailing idea of knowledge is that it’s essentially a collection of facts and an awareness of those facts. But others think knowledge may actually be geometric or spatial, like a manifold: a topological space with its own internal geometry.

Peter Gärdenfors’ work in The Geometry of Meaning speaks directly to this idea.

The conceptual space for “Color” as modeled by Peter Gärdenfors, used as an example of spatial models of cognition.

If we consider knowledge in this way, especially in the context of mapping the minds of AI models, then frameworks from physics and geometry become useful for mapping and measuring the knowledge contained in the embedding spaces and neural networks of AI models.

Imagine a realm where ideas, concepts, and innovations are not randomly scattered points, but are woven together in an intricate, multi-dimensional tapestry. A place where the distance between thoughts is not measured in pages or documents or hyperlinks, but in semantic similarity and conceptual proximity.
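To make "semantic similarity and conceptual proximity" concrete, here is a minimal sketch of cosine similarity, one common way to score how close two embeddings are. The three 4-dimensional vectors are made up for illustration; they are not output from any real embeddings model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings (assumption: invented values, 4 dimensions only)
toy_embeddings = {
    "dog": [0.9, 0.8, 0.1, 0.0],
    "puppy": [0.8, 0.9, 0.2, 0.1],
    "spreadsheet": [0.0, 0.1, 0.9, 0.8],
}

dog_puppy = cosine_similarity(toy_embeddings["dog"], toy_embeddings["puppy"])
dog_sheet = cosine_similarity(toy_embeddings["dog"], toy_embeddings["spreadsheet"])
print(f"dog vs puppy:       {dog_puppy:.3f}")
print(f"dog vs spreadsheet: {dog_sheet:.3f}")
```

With these invented values, "dog" scores much closer to "puppy" than to "spreadsheet", which is the kind of proximity the paragraph above describes.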

This is Knowledgespace. It’s not uniform or flat. It has peaks and valleys, dense clusters and wide open spaces. Regions where established ideas dominate, and uncharted territories where the next great breakthroughs lie waiting to be discovered.

The Hyper-Dimensionality of Meaning

Human intuition for physical dimensions generally caps out at four: three dimensions of space plus one of time. That’s spacetime.

So trying to get our minds around the idea of high-dimensional spaces (like Knowledgespace) can be a challenge.

For example, when I first learned that OpenAI's embeddings model text-embedding-3-large has 3072 dimensions, it blew my mind.

An embedding for the concept “dog” in this model would be a list of 3072 numbers, written along these lines -> [0.73172706, 0.3679354 , 0.03509789, 0.78527915, 0.82310865, 0.68471897, 0.68326835, 0.54179226, 0.42172536, etc.].

(Not every embeddings model uses this many dimensions, by the way. Using fewer dimensions reduces the fidelity of the mapping in embedding space. Typically this trade-off has an incremental impact on quality, though it varies depending on the domain.)

I tried to conceptualize that level of dimensionality in my head. The closest I got was finding Chernoff Faces, a method for visualizing higher-dimensional data where each part of a face represents a variable/dimension in a data set.

Example from Wikipedia

I tried abstracting this to the idea of a “Chernoff House” (like a strange method of loci variant) to conceptualize more dimensions, but even through creative representations it was impossible for me to grasp a 3072-dimensional thing in my head.

Realizing that we can map language and meaning like this (numerically) was the first time it hit me how multifaceted knowledge truly is.

And this is why studying cognition and knowledge through LLMs and AI is so fascinating to me. We can use the mind of a model as a proxy for exploring and mining the hyper-dimensional connections we can’t perceive in our own personal knowledge as well as the collective knowledge of humanity.

AI as a Navigator of Knowledgespace

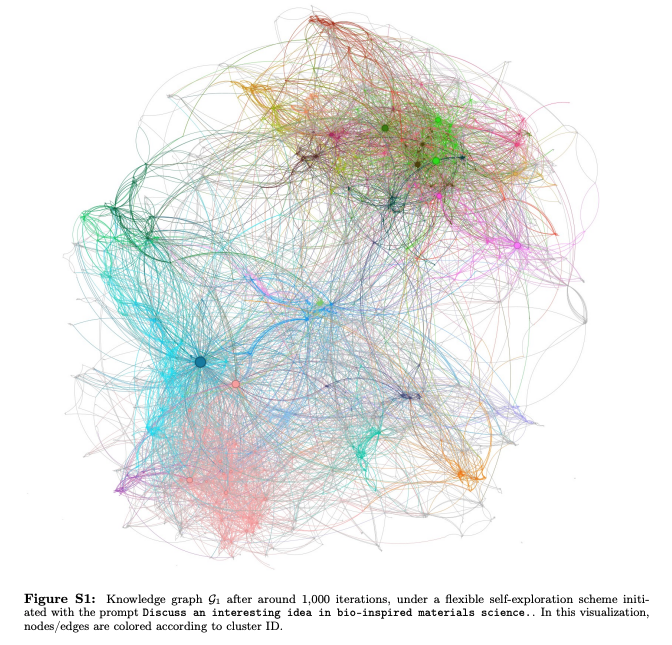

Through my graph-based and ontology-based explorations in building AI-powered autopoietic digital gardens, I got my first look at the “shape” of knowledgespace by letting different LLMs perform long-running open-ended semantic explorations when given a seed topic.

This recent publication from Markus Buehler at MIT (Agentic Deep Graph Reasoning Yields Self-Organizing Knowledge Networks) explores a similar approach and finds that autonomous agents tasked with materials science exploration will build self-organizing knowledge frameworks over time, generating novel connections between disparate topics independently.

My intuition is that the knowledge these agents are organizing is first being mined from a local Knowledgespace manifold created by the LLM’s training data and training process, which is itself an imperfect projection of a global Knowledgespace, a mathematical ideal which theoretically contains all knowledge known and unknown.

On a curved surface, the shortest path between two points is called a geodesic. In an LLM’s local Knowledgespace, we can potentially say that the autopoietic work of our AI agents is achieved by finding semantic geodesics between concepts and mining the latent space along those paths for new discoveries.
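One hedged way to picture a geodesic in code: when all we have is a cloud of points sampled from a curved surface, a common approximation (the idea behind Isomap) is to link each point to its nearest neighbors and take the shortest path through that graph. The sketch below does this for toy points on a half circle. It illustrates the general technique only; it is not the method used in my experiments, and the data and the choice of `k` are assumptions.

```python
import heapq
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_graph(points, k):
    """Link each point to its k nearest neighbors; edges are made symmetric."""
    graph = {i: {} for i in range(len(points))}
    for i, p in enumerate(points):
        dists = sorted((euclidean(p, q), j) for j, q in enumerate(points) if j != i)
        for d, j in dists[:k]:
            graph[i][j] = d
            graph[j][i] = d
    return graph

def shortest_path(graph, start, goal):
    """Dijkstra's algorithm. Returns (length, path), or (inf, []) if unreachable."""
    best = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        dist, node = heapq.heappop(heap)
        if node == goal:
            break
        if dist > best.get(node, math.inf):
            continue  # stale queue entry
        for nbr, weight in graph[node].items():
            candidate = dist + weight
            if candidate < best.get(nbr, math.inf):
                best[nbr] = candidate
                prev[nbr] = node
                heapq.heappush(heap, (candidate, nbr))
    if goal not in best:
        return math.inf, []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return best[goal], path

# Toy data (assumption): seven points sampled along a half circle of radius 1
arc = [(math.cos(math.radians(a)), math.sin(math.radians(a))) for a in range(0, 181, 30)]
graph = knn_graph(arc, k=2)
length, path = shortest_path(graph, 0, len(arc) - 1)
straight = euclidean(arc[0], arc[-1])
print(f"straight line: {straight:.3f}, along the curve: {length:.3f}, path: {path}")
```

The straight line cuts through empty space where there are no points, while the graph path stays on the sampled curve. That difference is the intuition behind preferring geodesics over raw distances when the space is curved.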

Although it’s not yet possible to generate new text by simply decoding embedding vectors in an embeddings model (i.e. we can’t fabricate an embedding that doesn’t exist and translate it into text), we can functionally use transformer and embedding models in tandem to decode these conceptual geodesics through a process of generating text → embedding text → mapping where it is in embedding space → hypothesizing how to move the next generation closer to the geodesic in embedding space → generating again.

Today's best AI models do much more than regurgitate training material; their training process encodes both surface-level and deeper connections between concepts in a high-dimensional vector space.
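The generate → embed → map → steer → generate loop described above can be sketched roughly as follows. Everything here is a simplification under stated assumptions: `embed` and `generate_candidates` are hypothetical callables standing in for an embeddings model and an LLM, the "geodesic" is approximated by straight-line interpolation between the two endpoint embeddings, and the toy 2-dimensional vectors are invented.

```python
import math

def lerp(a, b, t):
    """Point a fraction t of the way from vector a to vector b."""
    return [x + t * (y - x) for x, y in zip(a, b)]

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def walk_between(start_text, end_text, embed, generate_candidates, steps=3):
    """Pick, at each step, the generated text whose embedding is nearest the next waypoint."""
    start_vec, end_vec = embed(start_text), embed(end_text)
    current = start_text
    trail = [current]
    for step in range(1, steps + 1):
        # Assumption: waypoints lie on a straight line, a stand-in for a true geodesic
        waypoint = lerp(start_vec, end_vec, step / (steps + 1))
        candidates = generate_candidates(current, end_text)  # generate
        if not candidates:
            break
        # embed each candidate, map it against the waypoint, keep the closest
        current = min(candidates, key=lambda text: distance(embed(text), waypoint))
        trail.append(current)
    return trail

# Toy stand-ins (assumption): a lookup table instead of real models
TOY_VECTORS = {
    "biology": [0.0, 0.0],
    "biomimicry": [0.3, 0.2],
    "structural engineering": [0.7, 0.8],
    "architecture": [1.0, 1.0],
    "cooking": [0.9, 0.0],
}

def toy_embed(text):
    return TOY_VECTORS[text]

def toy_generate(current, target):
    # A real version would prompt an LLM; here every other known phrase is a candidate
    return [text for text in TOY_VECTORS if text != current]

trail = walk_between("biology", "architecture", toy_embed, toy_generate, steps=2)
print(" -> ".join(trail))
```

In a real setup the steering step would more likely be a revised prompt than a pick from a fixed list, but the shape of the loop is the same: generate, measure where the text landed, and nudge the next generation toward the path.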

And since the “neural composition” of these models is static once trained, they afford us a means to externalize these connections in more consistent and scalable ways than humans ever could, using processes like the one noted above and the ones I outline later in this post.

Research Evidence: Different Models, Same Space

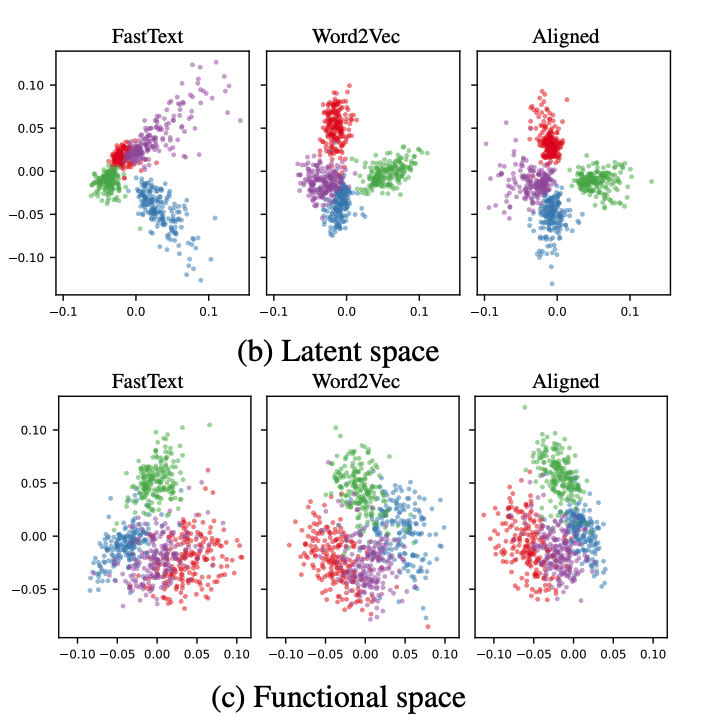

Recent research, e.g. Latent Functional Maps (LFM), demonstrates that different neural networks, even when trained on different data with different architectures, learn representations that can be aligned by building a map between the spaces of functions defined on each network’s latent space.

Source, figure 6 (b) and (c)

The fact that LFM can successfully align these different representations without excessive computation (even zero-shot in some cases) suggests that there is indeed some common/global structure to knowledge that different models are approximating.

And the specific mathematical approach used by LFM, spectral geometry, further suggests that the intrinsic geometry of the manifold is what matters, not just specific embeddings.

Combining this research with my own experience leads me to a conclusion that I noted above: each AI model’s mind map is a unique imperfect projection of pure Knowledgespace.

In this context, we might consider global Knowledgespace as a “mathematical ideal”. I see it as the space that encompasses all knowledge, known and unknown, and rather than creating new knowledge we are instead discovering undiscovered pre-existing knowledge.

Philosophically this perspective can become a bit contentious, as this particular debate extends all the way back to Plato’s Theory of Forms. I’m still debating this aspect myself.

Locally though, each model has its own local / submanifold representation of Knowledgespace that we can explore and interact with, e.g. interacting with GPT-4o is interacting with the local Knowledgespace embodied in GPT-4o’s neural network.

Implications for Discovery and Innovation

Through digesting these ideas, I can see potentially profound implications unfolding.

One of the biggest is that if what I’m proposing here can be demonstrated mathematically, then discovery may not be the random, serendipitous process we often imagine.

It could in fact be that the emergence of new technologies, market trends, and competitive shifts follows structured pathways within this high-dimensional landscape of knowledge.

In my exploration, I've come to view AI not just as a tool for analyzing data, but as a cartographer and navigator of this conceptual terrain.

It can help us identify areas of high potential, predict future developments, and uncover latent connections that might remain invisible to human perception.

The Penumbra: Where Innovation Happens

What excites me most here is exploring what I call the "penumbra" – the boundary zone between the known and the adjacent possible. This is where I believe disruptive innovation truly emerges.

In my own work, I've found that the most valuable insights rarely come from well-trodden territories but from these liminal spaces.

I've also been fascinated by how observer-dependent this space is (in the quantum physics sense of the term).

Just as different map projections distort physical reality in different ways, different AI models create different views of Knowledgespace. Additionally, user interactions (such as a chat) with model-specific Knowledgespaces will also, in a sense, produce observer-dependent distortions.

I've been thinking about how integrating these perspectives might give us a more complete understanding of the big picture.

Toward a Mathematical Framework

To give this intuition some rigor, I'm working on a mathematical framework that includes tools like the Bryan Metric Tensor to quantify these relationships and allow us to measure semantic distances, identify high-curvature regions, and predict the trajectory of knowledge evolution.

In physics and differential geometry, a metric tensor is the mathematical object that defines distances, angles, and curve lengths in a curved space. It’s a bit more abstract in such high-dimensional spaces, but I think there are ways to land the plane.
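For readers who want the standard definitions, this is how a metric tensor g measures length in textbook Riemannian geometry (repeated indices are summed). These are the general formulas only; they are not the specific metric I'm developing, which this post does not define.

```latex
% Line element: squared length of an infinitesimal step dx at the point x
ds^2 = g_{ij}(x)\, dx^i\, dx^j

% Length of a curve \gamma(t) for t in [0, 1]
L[\gamma] = \int_0^1 \sqrt{ g_{ij}(\gamma(t))\, \dot{\gamma}^i(t)\, \dot{\gamma}^j(t) }\; dt

% Distance between p and q: the infimum of L over curves joining them
d(p, q) = \inf_{\gamma(0) = p,\ \gamma(1) = q} L[\gamma]
```

In flat space, g is the identity matrix and d(p, q) reduces to ordinary Euclidean distance. In a curved space g varies from point to point, and a geodesic is a curve that locally minimizes L.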

And as theoretical as this may seem, I've been encouraged to see research demonstrating the power of both semantic and geometric approaches to knowledge mapping. Researchers have shared some exciting findings and implications in papers such as the ones previously mentioned. Two additional ones worth reading are Predicting research trends with semantic and neural networks and Zero-Shot Generalization for Causal Inferences.

We're moving beyond simple "search" of pre-existing knowledge bases to something that feels more like true navigation and discovery in latent space.

The Strategic Frontier

The implications for how we innovate in this paradigm feel profound to me.

Organizations that learn to understand, navigate, and build extractive systems for Knowledgespace are likely to gain remarkable advantages – anticipating market shifts before they become obvious, finding non-obvious connections between seemingly unrelated fields, directing resources toward the most promising areas, and gaining deeper insights into competitive positioning.

I see Knowledgespace as a new frontier for exploration. The individuals and organizations that learn to map it, explore it, and understand its hidden structure might well be the ones who define our collective future.

I'm currently working on a more comprehensive paper introducing this theory of Knowledgespace and a suite of accompanying terms and concepts, and I can't wait to share it when it's ready. If these ideas resonate with you, I'd love to hear your thoughts and perspectives as I continue this journey.