Knowledge Diffusion: A New Paradigm in LLM Information Extraction

Introduction

In my recent explorations of Large Language Models (LLMs), I've stumbled upon what I believe to be a significant breakthrough in how we can extract and organize information from these powerful AI systems.

This article introduces the concept of knowledge diffusion, a novel framework for AI-driven knowledge extraction that I've been developing and testing.

It's an approach that could revolutionize how we interact with AI and how we approach research and personalized learning.

The Concept of Knowledge Diffusion

Knowledge diffusion, as I've conceived it in the context of AI and LLMs, represents an innovative approach to information extraction and organization.

This process leverages the vast knowledge embedded in LLMs to create a multidimensional, interconnected web of information.

The name is inspired by Stable Diffusion, an AI image-generation model that starts from random noise (derived from a “seed”) and gradually denoises it into a finished image guided by the user’s prompt.

Unlike traditional methods of information retrieval, which often yield discrete pieces of information, knowledge diffusion starts with a seed topic and expands outward in multiple directions simultaneously, creating a rich tapestry of interrelated concepts.

What sets knowledge diffusion apart is its ability to draw on the breadth of the model's knowledge to make connections that might not be immediately apparent to humans.

It's not constrained by typical search patterns or predefined categorizations. Instead, it can traverse domains, identifying relevant links and enriching our understanding in unexpected ways.

This process is iterative and self-refining. As the knowledge base grows, the AI doesn't just add new information; it continually revisits and refines existing content, strengthening connections and deepening the overall understanding of the topic at hand.

Experimental Implementation: The AI-Powered Digital Garden

To explore the potential of knowledge diffusion, I recently developed an AI-powered digital garden generator that populates an Obsidian vault with topical notes written by AI.

This system uses LLMs to create and cultivate a network of interconnected notes on any given topic, autonomously expanding and refining the knowledge base.
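An Obsidian vault is simply a folder of Markdown files, and Obsidian links notes with `[[Note Name]]` wikilinks, so writing one generated note into a vault can be sketched in a few lines of Python. This is a minimal illustration, not the generator's actual code: the helper names (`safe_filename`, `render_note`, `write_note`), the "Related" section layout, and the exact set of characters stripped from titles are all assumptions.

```python
import re
from pathlib import Path


def safe_filename(title: str) -> str:
    """Strip characters that are unsafe in file names or wikilinks (an assumed, conservative set)."""
    return re.sub(r'[\\/:*?"<>|#^\[\]]', "", title).strip()


def render_note(title: str, body: str, links: list[str]) -> str:
    """Render a Markdown note ending in a 'Related' section of Obsidian-style [[wikilinks]]."""
    related = "\n".join(f"- [[{safe_filename(link)}]]" for link in links)
    return f"# {title}\n\n{body.strip()}\n\n## Related\n\n{related}\n"


def write_note(vault: Path, title: str, body: str, links: list[str]) -> Path:
    """Write one note into the vault folder; Obsidian picks up any .md file placed there."""
    vault.mkdir(parents=True, exist_ok=True)
    path = vault / f"{safe_filename(title)}.md"
    path.write_text(render_note(title, body, links), encoding="utf-8")
    return path
```

Because the links are written as plain wikilinks, Obsidian's graph view can draw the connections without any extra metadata.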

It’s an application of agentic AI that runs multiple AI models asynchronously to generate, expand, and refine a knowledge structure.
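How the asynchronous calls are orchestrated isn't spelled out here. One plausible pattern, sketched with Python's `asyncio`, is to draft several subtopic notes concurrently and spread the requests across models. `fake_llm` is a hypothetical placeholder for a real model client, and the round-robin assignment of models is an assumption made purely for illustration.

```python
import asyncio


async def fake_llm(model: str, prompt: str) -> str:
    """Hypothetical placeholder for a real model call; returns a canned reply."""
    await asyncio.sleep(0.01)  # simulate inference latency
    return f"[{model}] {prompt}"


async def expand_subtopics(subtopics: list[str], models: list[str]) -> dict[str, str]:
    """Draft one note per subtopic concurrently, assigning models round-robin."""
    tasks = [
        fake_llm(models[i % len(models)], f"Write a note about: {topic}")
        for i, topic in enumerate(subtopics)
    ]
    drafts = await asyncio.gather(*tasks)  # gather returns results in input order
    return dict(zip(subtopics, drafts))


if __name__ == "__main__":
    notes = asyncio.run(expand_subtopics(["Dough", "Sauce", "Cheese"], ["writer", "editor"]))
    for topic, draft in notes.items():
        print(topic, "->", draft)
```

Running the calls concurrently means total wall-clock time is bounded by the slowest request rather than the sum of all of them, which matters when each note takes seconds to generate on local hardware.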

In a recent experiment, I let this system run for an hour on the seed topic "How To Make Delicious Pizza", powered by Mistral NeMo 12B running locally on my MacBook Pro.

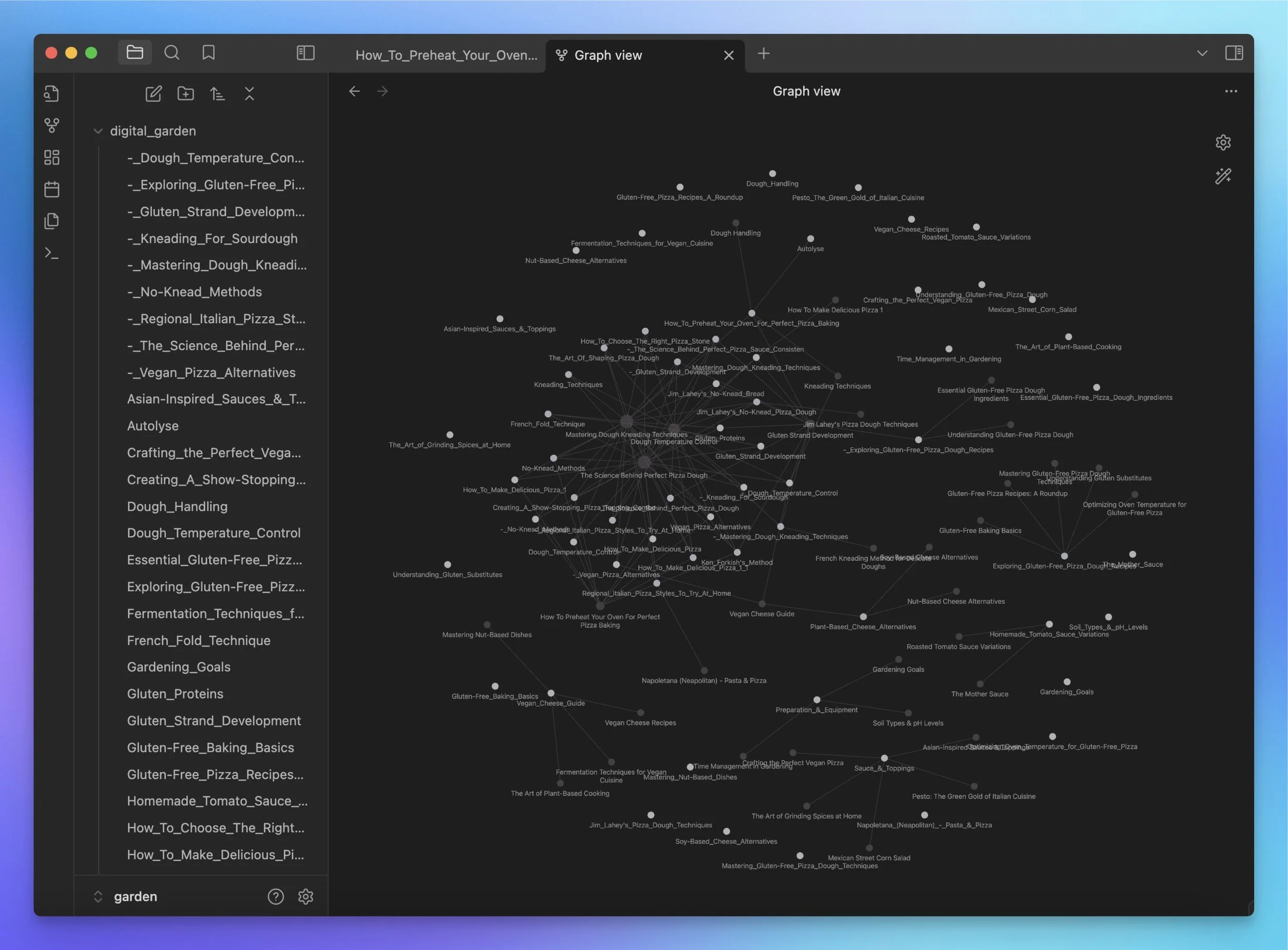

The results were remarkable: it generated 62 Markdown files, each linked to up to 5 other files based on semantic relevance.

You can see these connections in the screenshot of my Obsidian knowledge graph above.
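Linking each note to up to 5 others "based on semantic relevance" can be approximated as a top-k nearest-neighbour search over note embeddings. The sketch below assumes each note already has an embedding vector from some embedding model (not specified here), uses cosine similarity as the relevance score, and substitutes tiny hand-written vectors for real embeddings; `link_notes` and its `min_sim` threshold are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def link_notes(embeddings: dict[str, list[float]], k: int = 5,
               min_sim: float = 0.0) -> dict[str, list[str]]:
    """For each note, keep up to k other notes ranked by cosine similarity above min_sim."""
    links = {}
    for title, vec in embeddings.items():
        scored = [(cosine(vec, other_vec), other)
                  for other, other_vec in embeddings.items() if other != title]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
        links[title] = [other for sim, other in scored[:k] if sim > min_sim]
    return links


# Toy 3-dimensional "embeddings" standing in for real model output.
toy = {
    "Pizza Dough": [0.9, 0.1, 0.0],
    "Yeast": [0.8, 0.2, 0.1],
    "Tomato Sauce": [0.1, 0.9, 0.2],
    "Wood-Fired Ovens": [0.2, 0.1, 0.9],
}
print(link_notes(toy, k=2))
```

Capping each note at k neighbours keeps the graph readable; a similarity threshold would additionally stop weakly related notes from being linked just to fill the quota.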

The knowledge diffusion process was executed as follows:

1. Generation of an initial seed file on the core topic.
2. Expansion from the seed, creating new files on semantically relevant subtopics.
3. Iterative refinement, where the AI draws on both its internal knowledge and the previously generated content.
4. Establishment of internal links between files based on semantic relevance.
5. Repetition of steps 2-4 to achieve the desired depth and breadth.
6. Saving and mapping everything into a final knowledge graph.
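The steps above can be sketched as a simple breadth-first loop. This is a minimal offline sketch, not the actual implementation: `draft_note`, `propose_subtopics`, and `refine_note` are hypothetical stand-ins for LLM prompts, and the Jaccard word-overlap score in `overlap` is a crude substitute for the embedding-based semantic relevance a real system would use.

```python
# Hypothetical stand-ins for LLM prompts so the sketch runs offline.
def draft_note(topic: str) -> str:
    return f"{topic}: an overview of {topic.lower()}"


def propose_subtopics(topic: str, n: int) -> list[str]:
    return [f"{topic} aspect {i}" for i in range(1, n + 1)]


def refine_note(text: str, context: list[str]) -> str:
    # A real system would re-prompt the model with the note plus its linked notes.
    if not context:
        return text
    return f"{text} [refined using {len(context)} linked notes]"


def overlap(a: str, b: str) -> float:
    """Jaccard word overlap: a crude stand-in for embedding-based semantic relevance."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def diffuse(seed: str, rounds: int = 2, branching: int = 3, max_links: int = 5):
    """Grow a linked set of notes outward from a seed topic."""
    notes = {seed: draft_note(seed)}  # 1. seed note on the core topic
    links: dict[str, list[str]] = {seed: []}
    frontier = [seed]
    for _ in range(rounds):  # 5. repeat steps 2-4
        new_titles = []
        for parent in frontier:  # 2. expand into subtopics
            for child in propose_subtopics(parent, branching):
                if child not in notes:
                    notes[child] = draft_note(child)
                    links[child] = []
                    new_titles.append(child)
        for title in notes:  # 3. refine each note, using its current links as context
            notes[title] = refine_note(notes[title], [notes[t] for t in links[title]])
        for title in notes:  # 4. link each note to its most relevant peers
            scores = {t: overlap(notes[title], notes[t]) for t in notes if t != title}
            ranked = sorted(scores, key=scores.get, reverse=True)
            links[title] = [t for t in ranked[:max_links] if scores[t] > 0]
        frontier = new_titles
    return notes, links  # 6. the final knowledge graph (notes plus links)


notes, links = diffuse("How To Make Delicious Pizza")
print(len(notes), "notes")
```

With the defaults (`rounds=2`, `branching=3`), the seed expands into 3 subtopics and then 9 more, for 13 notes in total, each linked to at most 5 others. Only the newest notes are expanded in each round, which is what makes the growth radiate outward from the seed.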

This experiment demonstrated the potential of knowledge diffusion to rapidly create a rich, interconnected knowledge base on a given topic.

The Unique Value of AI-Generated Knowledge Structures

Through my work with this system and my frequent collaboration with LLMs, I've come to realize that the value of this approach extends beyond simple information aggregation.

By leveraging the inherent structure of LLMs, we can extract knowledge in a form that is qualitatively different from traditional human-organized information.

LLMs are themselves large neural networks that encode dense associations across a vast range of topics, a web of relationships that we humans find very difficult to build by hand given our cognitive limitations.

When we extract information from these models using the knowledge diffusion framework, we obtain a knowledge structure that is semantically and ontologically richer than traditional knowledge bases.

For instance, in my pizza-themed knowledge base I found unexpected connections: detailed dough-making techniques attributed to renowned bakers, for example, were woven seamlessly into the broader context.

This level of detail and interconnectedness is rarely found in conventional, manually curated information sources.

Implications for Research and Knowledge Work

As I've reflected on the potential applications of this technology, I've become increasingly excited about its implications.

Imagine a personalized, adaptive knowledge base that continually expands based on your interests. This could significantly change how we approach personal learning, academic research, and professional development.

Moreover, this approach aligns with a key strategic consideration in the age of AI: the importance of comprehensive foundational knowledge.

Drawing from ancient Greek philosophy, we can consider the concepts of episteme (established, theoretical knowledge) and phronesis (practical wisdom).

Knowledge diffusion could serve as a powerful tool for rapidly establishing episteme, freeing human cognitive resources for the development of specialized proprietary knowledge.

In my view, by providing a comprehensive picture of "what everyone knows" on a given topic, knowledge diffusion creates a solid foundation for innovation and strategic thinking.

It allows individuals and organizations to quickly establish a baseline understanding, identifying areas of consensus and potential gaps in current knowledge.

I'm particularly intrigued by the potential for comparative analysis between different LLMs using this framework. Observing how different models diffuse knowledge on the same topic could be a unique source of insight.
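One simple starting point for such a comparison is to measure how much two models' graphs overlap, both in the topics they produce and in the links between them, and to list the topics only one model produced (a rough proxy for consensus and gaps). The sketch assumes each graph is a dictionary mapping a note title to the titles it links to, treats links as undirected, and matches titles only after light normalization; `compare_graphs` is a hypothetical helper, and real titles from different models would likely need fuzzier, semantic matching.

```python
def normalize(title: str) -> str:
    """Lower-case and collapse whitespace so trivially different titles compare equal."""
    return " ".join(title.lower().split())


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity; two empty sets are treated as identical."""
    return len(a & b) / len(a | b) if a | b else 1.0


def compare_graphs(links_a: dict[str, list[str]], links_b: dict[str, list[str]]) -> dict:
    """Compare two knowledge graphs (title -> linked titles) produced by different models."""
    topics_a = {normalize(t) for t in links_a}
    topics_b = {normalize(t) for t in links_b}
    # Undirected edges: a link A->B and a link B->A count as the same connection.
    edges_a = {frozenset((normalize(s), normalize(t))) for s, ts in links_a.items() for t in ts}
    edges_b = {frozenset((normalize(s), normalize(t))) for s, ts in links_b.items() for t in ts}
    return {
        "topic_overlap": jaccard(topics_a, topics_b),
        "edge_overlap": jaccard(edges_a, edges_b),
        "only_in_a": sorted(topics_a - topics_b),
        "only_in_b": sorted(topics_b - topics_a),
    }


model_a = {"Pizza Dough": ["Yeast"], "Yeast": ["Pizza Dough"], "Tomato Sauce": []}
model_b = {"pizza dough": ["yeast", "Wood-Fired Ovens"], "Yeast": [], "Wood-Fired Ovens": []}
print(compare_graphs(model_a, model_b))
```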

Conclusion

From my perspective, knowledge diffusion represents a significant shift in how we might interact with and extract valuable structured knowledge from LLMs.

By moving beyond simple question-answering to the creation of rich, interconnected knowledge structures, we open new possibilities for learning, innovation, and knowledge work.

We also unlock new ways to dynamically create personalized knowledge graphs and ontologies on the topics that most interest us.

Adding steerability and more human-in-the-loop controls could also make the system considerably more powerful.

I’m keen to continue researching in this area, and I invite you to reach out to me (via my contact page or on LinkedIn) for further discussion if this is of interest to you as well.

Thanks for reading.